Efficiency is essential to support responsiveness w.r.t. ever-growing datasets, especially for Deep Learning (DL) systems. DL frameworks have traditionally embraced deferred execution-style DL code—supporting symbolic, graph-based Deep Neural Network (DNN) computation. While scalable, such development is error-prone, non-intuitive, and difficult to debug. Consequently, more natural, imperative DL frameworks encouraging eager execution have emerged but at the expense of run-time performance. Though hybrid approaches aim for the “best of both worlds,” using them effectively requires subtle considerations to make code amenable to safe, accurate, and efficient graph execution—avoiding performance bottlenecks and semantically inequivalent results. We discuss the engineering aspects of a refactoring tool that automatically determines when it is safe and potentially advantageous to migrate imperative DL code to graph execution and vice-versa.

![Introduction Motivation Implementation Evaluation Conc.

Deep Learning Systems & Run-time Performance

Machine Learning (ML), including Deep Learning (DL), systems are

pervasive.

As datasets grow, efficiency becomes essential to support

responsiveness [Zhou et al., 2020].

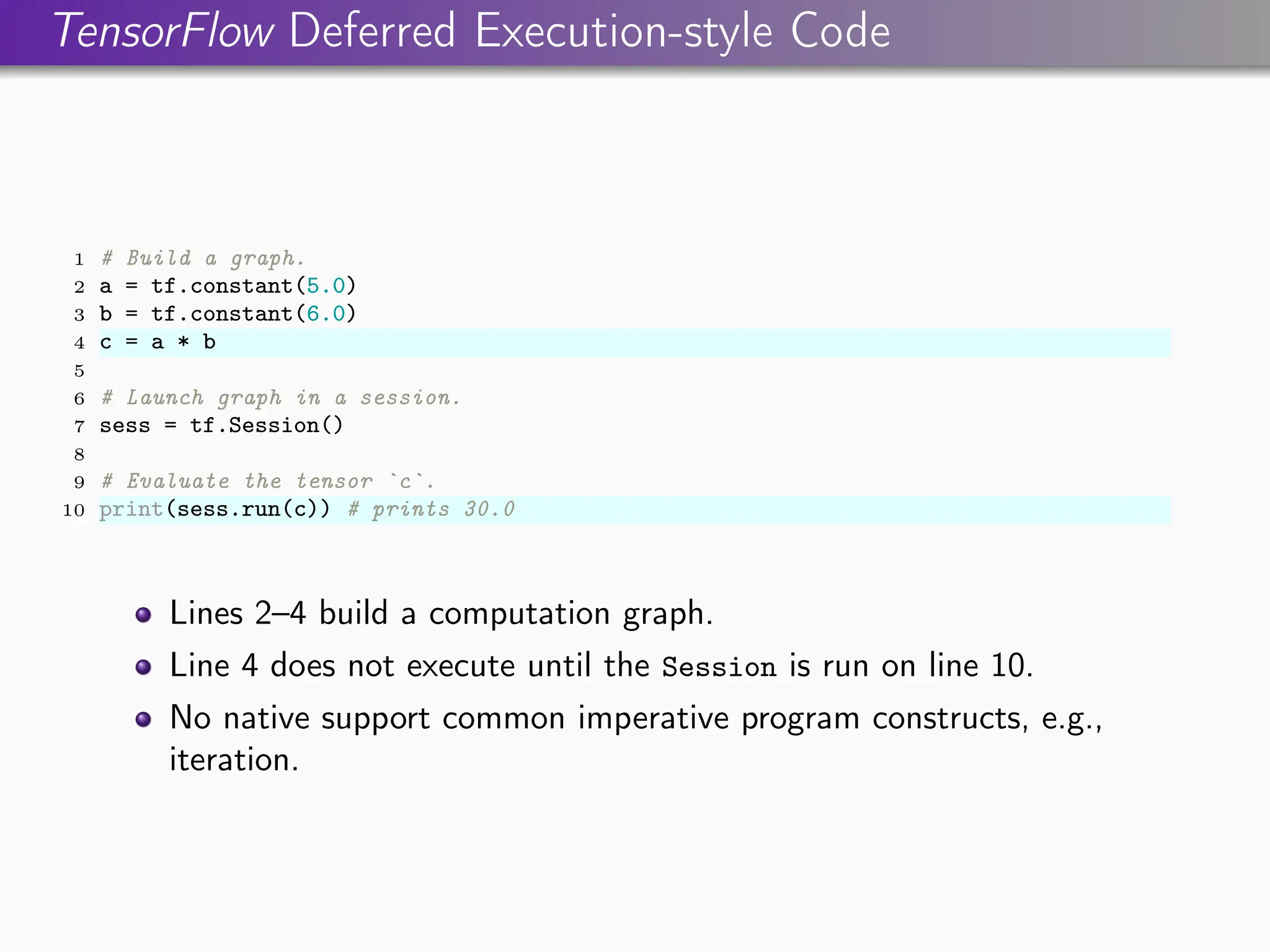

For efficiency, DL frameworks have traditionally embraced a deferred

execution-style supporting graph-based (DNN) computation.

Scalable, but development is . . .

Error-prone.

Cumbersome.

Produces programs that are difficult to debug.

Because graph computation executes statements in a non-imperative

order, traditional SE tools cannot help troubleshoot bugs [Arpteg

et al., 2018].

Khatchadourian, Castro Vélez, Bagherzadeh, Jia, Raja Hybridize Functions Imperative DL Refactoring 2 / 18](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-2-2048.jpg)

![Eager TensorFlow Imperative (OO) DL Model Code

class SequentialModel(tf.keras.Model):
  def __init__(self, **kwargs):
    super(SequentialModel, self).__init__(...)
    self.flatten = layers.Flatten(input_shape=(28, 28))
    num_layers = 100 # Add many small layers.
    # Keras reserves the name `layers` on models, so the list uses another name.
    self.my_layers = [layers.Dense(64, activation="relu") for n in range(num_layers)]
    self.dropout = tf.keras.layers.Dropout(0.2)
    self.dense_2 = tf.keras.layers.Dense(10)

  def __call__(self, x):
    x = self.flatten(x)
    for layer in self.my_layers:
      x = layer(x)
    x = self.dropout(x)
    x = self.dense_2(x)
    return x](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-7-2048.jpg)

![Hybridized TensorFlow Imperative (OO) DL Model Code

1 class SequentialModel(tf.keras.Model):
2   def __init__(self, **kwargs):
3     super(SequentialModel, self).__init__(...)
4     self.flatten = layers.Flatten(input_shape=(28, 28))
5     num_layers = 100 # Add many small layers.
6     self.my_layers = [layers.Dense(64, activation="relu") for n in range(num_layers)]
7     self.dropout = tf.keras.layers.Dropout(0.2)
8     self.dense_2 = tf.keras.layers.Dense(10)
9
10   @tf.function(...) # Executes model as graph (optional args).
11   def __call__(self, x):
12     x = self.flatten(x)
13     for layer in self.my_layers:
14       x = layer(x)
15     x = self.dropout(x)
16     x = self.dense_2(x)
17     return x

On line 10, AutoGraph is used to potentially enhance performance.

It decorates the model’s __call__() method with @tf.function.

At run-time, the method’s execution will be “traced” (a speedup of ∼9.22).](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-8-2048.jpg)
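
The "tracing" mentioned on the slide can be pictured with a toy, pure-Python model. This is only a sketch of the trace-once, replay-later idea; it is not how TensorFlow implements `@tf.function`, and the names `toy_function`, `Sym`, and `Graph` are hypothetical. It assumes the decorated function only uses `+` and `*` and returns a traced value.

```python
import functools
import operator

OPS = {"add": operator.add, "mul": operator.mul}


class Graph:
    """A recorded list of operations over numbered value slots."""

    def __init__(self, num_inputs):
        self.ops = []                # (op name, lhs slot, rhs, out slot)
        self.num_slots = num_inputs  # inputs occupy the first slots
        self.output = None

    def run(self, *args):
        # Replay the recorded operations on concrete values; no Python body runs.
        slots = list(args) + [None] * (self.num_slots - len(args))
        for name, lhs, (kind, rhs), out in self.ops:
            rhs_value = slots[rhs] if kind == "slot" else rhs
            slots[out] = OPS[name](slots[lhs], rhs_value)
        return slots[self.output]


class Sym:
    """Symbolic placeholder that records arithmetic instead of computing it."""

    def __init__(self, graph, slot):
        self.graph, self.slot = graph, slot

    def _record(self, name, other):
        rhs = ("slot", other.slot) if isinstance(other, Sym) else ("const", other)
        out = self.graph.num_slots
        self.graph.num_slots += 1
        self.graph.ops.append((name, self.slot, rhs, out))
        return Sym(self.graph, out)

    def __add__(self, other):
        return self._record("add", other)

    def __mul__(self, other):
        return self._record("mul", other)


def toy_function(fn):
    """Trace `fn` once per tuple of argument types, then replay the graph."""
    cache = {}

    @functools.wraps(fn)
    def wrapper(*args):
        key = tuple(type(a) for a in args)
        if key not in cache:  # first call with these types: trace
            graph = Graph(len(args))
            result = fn(*(Sym(graph, i) for i in range(len(args))))
            graph.output = result.slot
            cache[key] = graph
        return cache[key].run(*args)

    return wrapper


trace_log = []


@toy_function
def scale_and_shift(x, y):
    trace_log.append("traced")  # Python side effect: runs only while tracing
    return x * 2 + y


print(scale_and_shift(3, 4))      # 10
print(scale_and_shift(5, 1))      # 11
print(trace_log)                  # ['traced']
print(scale_and_shift(1.5, 0.5))  # 3.5 (new argument types cause a second trace)
```

Two behaviors of the toy mirror concerns raised later in the deck: the Python-level side effect runs only during tracing, and a change of input types forces a new trace.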


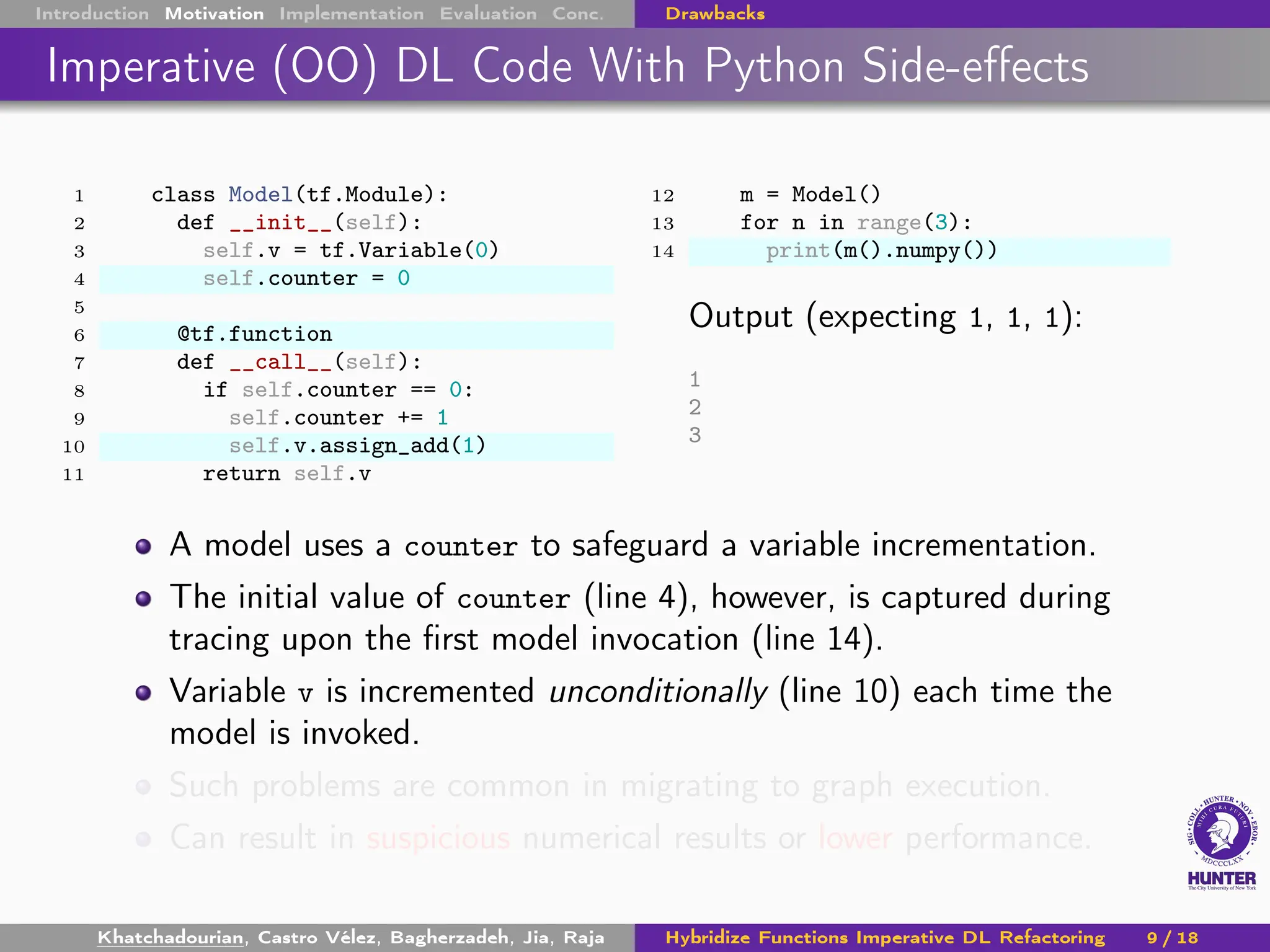

![Hybridization Drawbacks

Needs non-trivial, specialized metadata [Jeong et al., 2019].

Exhibits limitations and known issues with native program constructs.

Subtle considerations are required to:

Specify (decorate) the functions to be migrated.

Make code amenable to safe, accurate, and efficient graph execution.

Avoid performance bottlenecks and semantically inequivalent results [Cao et al., 2022, Castro Vélez et al., 2022].

Manual analysis and refactoring (semantics-preserving, source-to-source transformation) for optimal results can be error- and omission-prone [Dig et al., 2009].

Further complicated by:

Increasing Object-Orientation (OO) in DL model code (e.g., Keras).

Dynamically-typed languages (e.g., Python).](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-11-2048.jpg)

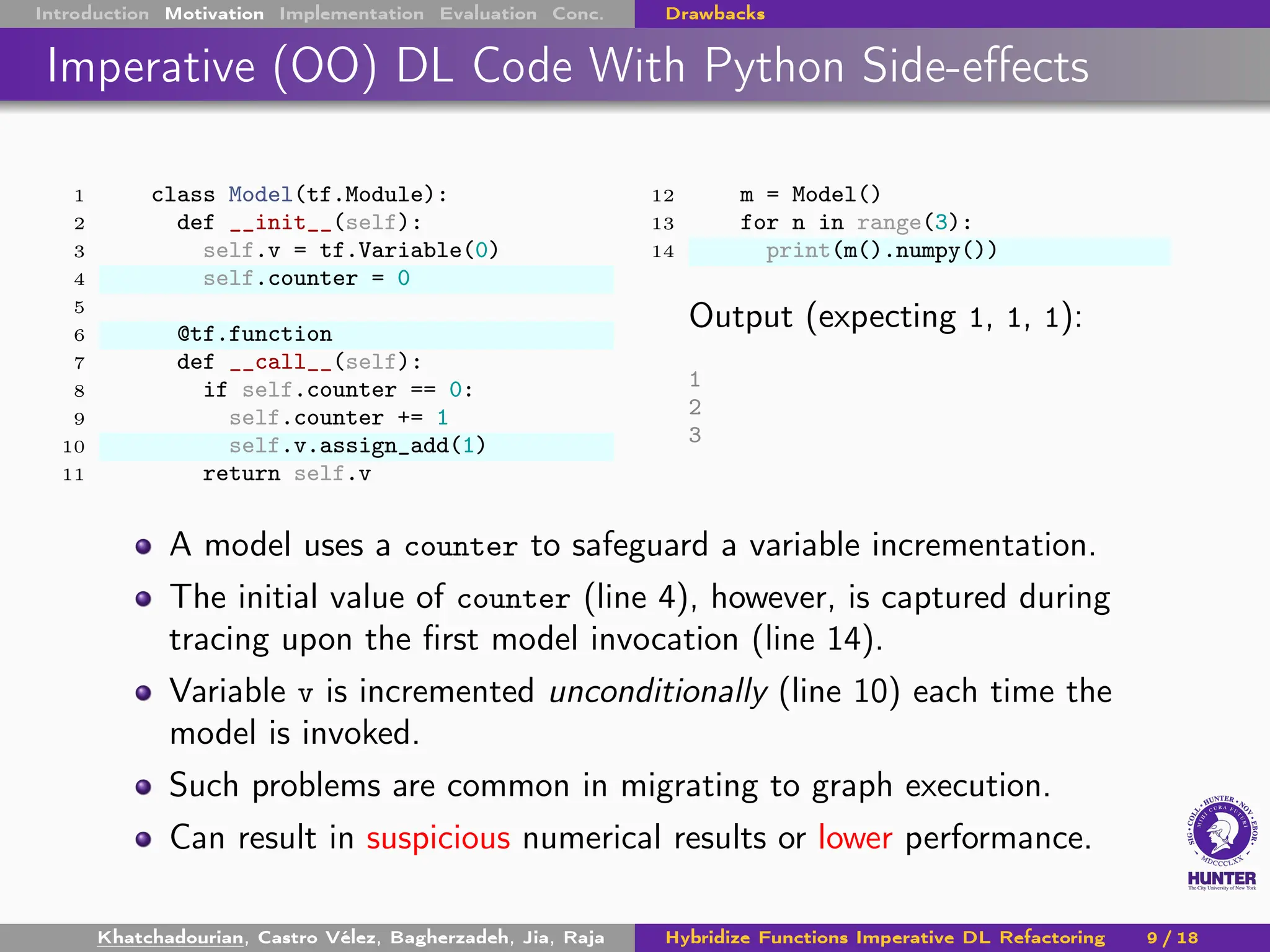

![Problem Insight

Although imperative DL code is sequentially executed, hybridizing code resembles parallelizing sequential code.

Example

To avoid unexpected behavior, hybrid functions, like concurrent programs, should avoid side-effects.

Idea

Adapt concepts from automated refactorings that parallelize sequential code, e.g., Streaming APIs [Khatchadourian et al., 2019].](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-18-2048.jpg)
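
To make the side-effect concern concrete, here is a minimal syntactic check built on Python's standard `ast` module. It is a sketch only and far weaker than the ModRef-based analysis the tool builds on WALA: it flags writes to attributes or subscripts, `global`/`nonlocal` declarations, and calls to a small deny-list. The deny-list `IMPURE_CALLS` and the function name `find_side_effects` are assumptions made for illustration. It needs Python 3.9+ for `ast.unparse`.

```python
import ast
import textwrap

# Assumption: a tiny, illustrative deny-list of obviously impure built-ins.
IMPURE_CALLS = {"print", "open", "input"}


def find_side_effects(source):
    """Return {function name: [reasons]} for functions that look impure."""
    tree = ast.parse(textwrap.dedent(source))
    report = {}
    for func in ast.walk(tree):
        if not isinstance(func, ast.FunctionDef):
            continue
        reasons = []
        for node in ast.walk(func):
            if isinstance(node, (ast.Global, ast.Nonlocal)):
                reasons.append("declares " + ", ".join(node.names) + " as global/nonlocal")
            elif isinstance(node, (ast.Assign, ast.AugAssign, ast.AnnAssign)):
                targets = node.targets if isinstance(node, ast.Assign) else [node.target]
                for target in targets:
                    # Writing through an attribute or subscript mutates shared state.
                    if isinstance(target, (ast.Attribute, ast.Subscript)):
                        reasons.append("writes to " + ast.unparse(target))
            elif isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
                if node.func.id in IMPURE_CALLS:
                    reasons.append("calls " + node.func.id + "()")
        if reasons:
            report[func.name] = reasons
    return report


EXAMPLE = """
class Model:
    def __call__(self, x):
        self.calls += 1   # mutates object state
        return x * 2

def pure(x):
    y = x + 1
    return y * 2
"""

report = find_side_effects(EXAMPLE)
print(report)  # {'__call__': ['writes to self.calls']}
```

A function flagged this way is a poor hybridization candidate, because the mutation would run during tracing rather than on every call.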

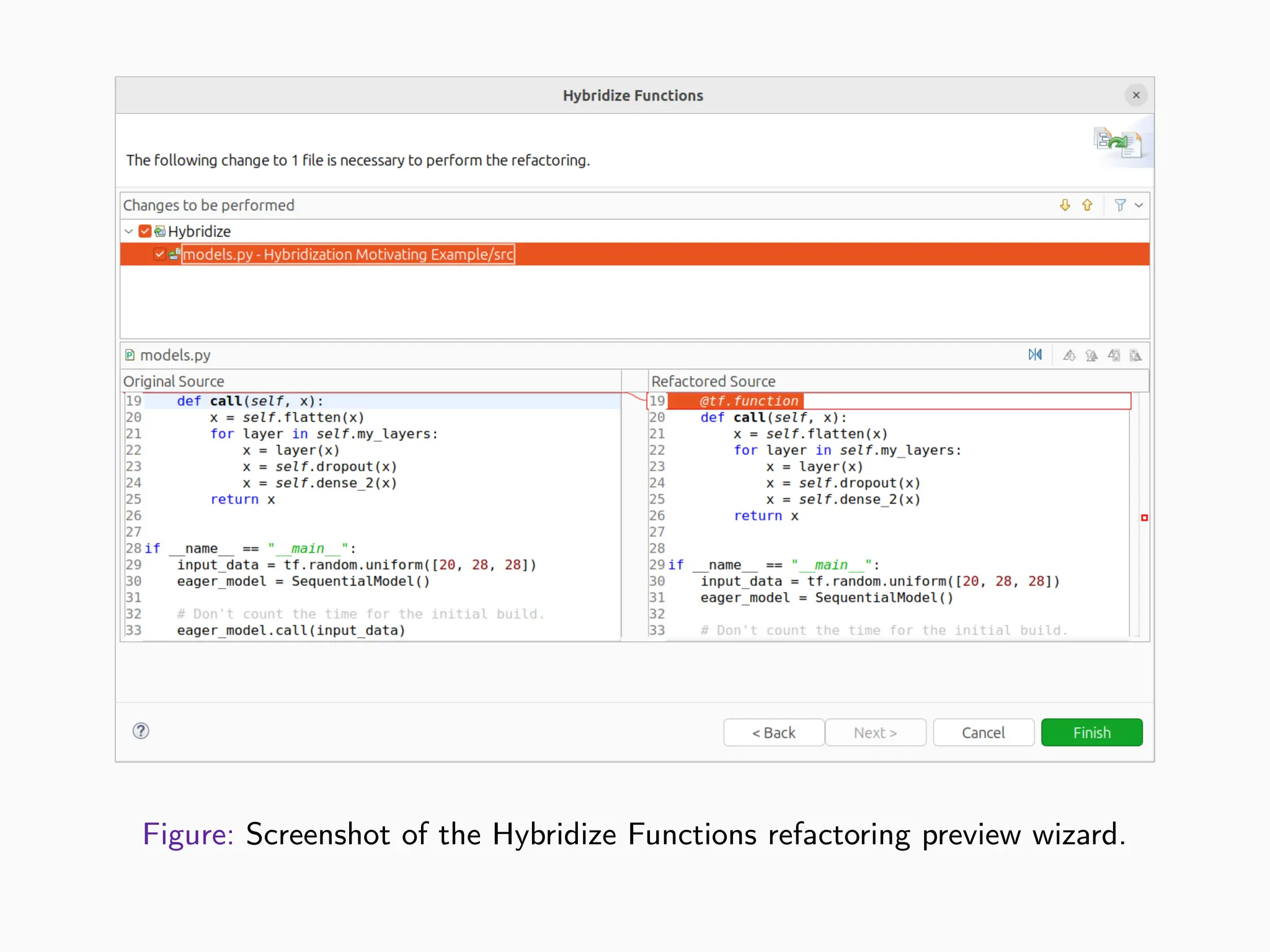

![Approach Highlights

Novel tensor analysis for imperative DL code.

Current analyzers work only on procedural (TF 1) code.

Modernization of WALA Ariadne [Dolby et al., 2018] for imperative (TF 2) code.

Implemented as a PyDev Eclipse IDE plug-in [Zadrozny, 2023].

Integrates Ariadne for tensor type inference analysis.

Leverages complementary speculative analysis [Zhou et al., 2020] using contextual DL keywords for difficult static cases.](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-20-2048.jpg)

![Architecture & Dependencies

Eclipse leveraged for its refactoring framework and test engine [Bäumer et al., 2001].

PyDev used for its efficient indexing and refactoring support, and because it is open-source for all Python development.

WALA used for the static analyses (ModRef) on which our side-effect analysis is built.

WALA Ariadne used for Python analysis, tensor type inference, and (TensorFlow) library modeling.](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-21-2048.jpg)

![Modernizing Ariadne: New Enhancements

Python module packages.

Wild card imports.

Intra-package references (relative imports; from .. import X).

Package initialization scripts.

Automatic discovery of unit test entry points.

Non-scalar tensor dataset [Google LLC, 2023] iteration.

Modeling of additional libraries.

Static and class methods analysis.

Analysis of custom decorators.

Callable object (functor) analysis (used in Keras).](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-24-2048.jpg)
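
For readers less familiar with the Python features named above, the following single-file sketch shows three of them together: a custom decorator, static and class methods, and a callable object. The names `logged` and `Scaler` are hypothetical and nothing here comes from Ariadne itself. Package-level features (wild card and relative imports, package initialization scripts) need a multi-file layout and are not shown.

```python
import functools


def logged(fn):
    """Custom decorator: an analysis must see through `wrapper` to reach `fn`."""

    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return fn(*args, **kwargs)

    wrapper.calls = 0
    return wrapper


class Scaler:
    """Callable object (functor), the calling style Keras layers and models use."""

    default_factor = 2

    def __init__(self, factor):
        self.factor = factor

    @classmethod
    def default(cls):
        # Class method: the receiver is the class, not an instance.
        return cls(cls.default_factor)

    @staticmethod
    def clip(value, limit=10):
        # Static method: no implicit receiver at all.
        return min(value, limit)

    @logged
    def __call__(self, x):
        # `scale(x)` dispatches here, through the decorator's wrapper.
        return self.clip(x * self.factor)


scale = Scaler.default()
print(scale(3))               # 6
print(scale(8))               # 10
print(Scaler.__call__.calls)  # 2
```

Each construct changes which code actually runs at a call site, which is why a call-graph-based analysis has to model it explicitly.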

![For Further Reading I

Abadi, Martín et al. (2016). “TensorFlow: A System for Large-Scale Machine Learning”. In: Symposium on Operating Systems Design and Implementation.

Agrawal, Akshay et al. (2019). TensorFlow Eager: A Multi-Stage, Python-Embedded DSL for Machine Learning. arXiv: 1903.01855 [cs.PL].

Apache (Apr. 8, 2021). Hybridize. Apache MXNet documentation. url: https://mxnet.apache.org/versions/1.8.0/api/python/docs/tutorials/packages/gluon/blocks/hybridize.html (visited on 04/08/2021).

Arpteg, A., B. Brinne, L. Crnkovic-Friis, and J. Bosch (2018). “Software Engineering Challenges of Deep Learning”. In: Euromicro Conference on Software Engineering and Advanced Applications. IEEE, pp. 50–59. doi: 10.1109/SEAA.2018.00018.

Bäumer, Dirk, Erich Gamma, and Adam Kiezun (Oct. 2001). “Integrating refactoring support into a Java development tool”. url: http://people.csail.mit.edu/akiezun/companion.pdf (visited on 09/10/2024).

Cao, Junming, Bihuan Chen, Chao Sun, Longjie Hu, Shuaihong Wu, and Xin Peng (2022). “Understanding Performance Problems in Deep Learning Systems”. In: FSE. FSE ’22. ACM, pp. 357–369. doi: 10.1145/3540250.3549123.

Castro Vélez, Tatiana, Raffi Khatchadourian, Mehdi Bagherzadeh, and Anita Raja (May 2022). “Challenges in Migrating Imperative Deep Learning Programs to Graph Execution: An Empirical Study”. In: MSR. MSR ’22. ACM/IEEE. ACM. doi: 10.1145/3524842.3528455.

Chen, Tianqi, Mu Li, Yutian Li, Min Lin, Naiyan Wang, Minjie Wang, Tianjun Xiao, Bing Xu, Chiyuan Zhang, and Zheng Zhang (2015). “MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems”. In: Workshop on Machine Learning Systems at NIPS. arXiv: 1512.01274 [cs.DC].

Chollet, François (2020). Deep Learning with Python. 2nd ed. Manning.](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-27-2048.jpg)

![For Further Reading III

Moldovan, Dan, James M. Decker, Fei Wang, Andrew A. Johnson, Brian K. Lee, Zachary Nado, D. Sculley, Tiark Rompf, and Alexander B. Wiltschko (2019). AutoGraph: Imperative-style Coding with Graph-based Performance. arXiv: 1810.08061 [cs.PL].

Negara, Stas, Nicholas Chen, Mohsen Vakilian, Ralph E. Johnson, and Danny Dig (2013). “A Comparative Study of Manual and Automated Refactorings”. In: ECOOP. Ed. by Giuseppe Castagna. Berlin, Heidelberg: Springer Berlin Heidelberg, pp. 552–576. isbn: 978-3-642-39038-8.

OpenAI, Inc. (Aug. 18, 2023). ChatGPT. url: https://chat.openai.com (visited on 08/18/2023).

Paszke, Adam et al. (Dec. 3, 2019). PyTorch: An Imperative Style, High-Performance Deep Learning Library. arXiv: 1912.01703 [cs.LG].

WALA (Sept. 8, 2024). T.J. Watson Libraries for Analysis. original-date: 2012-04-05T18:57:03Z. url: https://github.com/wala/WALA (visited on 09/10/2024).

Zadrozny, Fabio (Apr. 15, 2023). PyDev. url: https://www.pydev.org (visited on 05/31/2023).

Zhou, Weijie, Yue Zhao, Guoqiang Zhang, and Xipeng Shen (2020). “HARP: Holistic Analysis for Refactoring Python-Based Analytics Programs”. In: ICSE. doi: 10.1145/3377811.3380434.](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-29-2048.jpg)

![Appendix Static Analysis Refactoring LLMs Notebooks

Refactoring Developer Adoption

Developers generally underuse automated refactorings [Kim et al., 2012, Negara et al., 2013].

Data scientists and engineers may be more open to using automated (refactoring) tools.

Our approach will be fully automated with minimal barrier to entry.](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-33-2048.jpg)

![LLMs & Big Data Refactoring

LLMs [OpenAI, Inc., 2023] can also perform refactorings.

Other Big Data-driven refactorings [Dilhara et al., 2022] are exciting and promising.

Obtaining a (correct) dataset large enough to automatically extract the proposed refactorings is challenging as developers struggle with (manually) migrating DL code to graph execution [Castro Vélez et al., 2022].

LLM inference capabilities are currently limited.

LLMs have a token limitation.

Hybridization requires interprocedural analysis.](https://crownmelresort.com/image.slidesharecdn.com/presentation-250506160829-168353d2/75/Hybridize-Functions-A-Tool-for-Automatically-Refactoring-Imperative-Deep-Learning-Programs-to-Graph-Execution-34-2048.jpg)
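
Why hybridization needs interprocedural analysis can be sketched with a small worklist propagation over a call graph: a function that looks pure in isolation is still unsafe to trace if anything it calls, directly or transitively, has a side effect. The call graph, the function names in it, and `propagate_side_effects` are all hypothetical. A real analysis would derive the graph from the program, which is the role WALA plays in the tool.

```python
from collections import deque


def propagate_side_effects(call_graph, directly_impure):
    """Return every function that may reach an impure function through calls."""
    # Invert the graph so effects flow from callee to caller.
    callers = {}
    for caller, callees in call_graph.items():
        for callee in callees:
            callers.setdefault(callee, set()).add(caller)

    impure = set(directly_impure)
    worklist = deque(directly_impure)
    while worklist:
        callee = worklist.popleft()
        for caller in callers.get(callee, ()):
            if caller not in impure:  # newly discovered: keep propagating
                impure.add(caller)
                worklist.append(caller)
    return impure


# Hypothetical call graph: caller -> list of callees.
CALL_GRAPH = {
    "train_step": ["forward", "update_metrics"],
    "forward": ["dense"],
    "update_metrics": ["log_to_file"],
    "dense": [],
    "log_to_file": [],
}

impure = propagate_side_effects(CALL_GRAPH, {"log_to_file"})
print(sorted(impure))  # ['log_to_file', 'train_step', 'update_metrics']
```

Here `train_step` contains no side effect of its own, yet it is flagged because of a callee two levels down. `forward` and `dense` remain candidates for graph execution.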